How I Cracked Homestuck's Alchemy with Stable Diffusion and GPT-4

They said it could never be done. They said no one cared about Homestuck in 2023. I will prove them wrong. I'll show them all...

We’re finally achieving what Homestuck fans tried to do for over a decade. For real. Keep reading for an obnoxiously technical primer on how to do SBURB Alchemy at home.

This is technically part 2 of a loose series on generative AI, so read the first article afterwards if you’re interested.

What IS Homestuck alchemy?

SBURB is a video game[1] in Homestuck with a bunch of Computer Science 101-inspired features.

One of them is alchemy. It combines items.[2]

Characters use this mainly for weapons and outfits, but anything can be combined and created, from food to time machines to character brains.

Speaking of human brains, until recently we were pretty much certain this process would always require one. Originally, Andrew Hussie asked the readers for commands, and then came up with the results and drew them by hand. In the above case, the command was:

Dave: Combine fetus in a jar and self portrait photo.

and the result is the image you see above, as well as this text:

That would apparently make DAVE'S BRAIN IN A JAR. Gross.

It costs a king's ransom though because of course the organ is virtually inimitable.

That’s the holy grail here. Input two items and an operation, get a funny result… and an image?

Let’s stop and focus on the operation for now. What do I mean by that? Well, the fandom is confused about what the two operations “&&” and “||” do. They stand for the AND and OR bitwise operations in computer science, and they have a similar mechanistic meaning for the output of the items’ codes,[3] but for our purposes they mean this:

AND (&&): Combines the functions of the two objects. A hammer and a pogo combine into something that can serve as both a pogo and a hammer.

OR (||): Combines the appearances of the items, keeping the functionality of only one of them.

There are exceptions to this behavior in Homestuck, but it’s as close as we can get, and Hussie both left the Internet and hated answering these kinds of questions, so we’ll never know.
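To make the bitwise reading concrete, here is a minimal Python sketch. It assumes the 64-symbol alphabet fan tools commonly use for captcha codes (0-9, A-Z, a-z, !, ?), so each of the 8 characters carries 6 bits and a code is a 48-bit number. That mapping is an assumption, not necessarily what The Overseer Project used: under it, OR can only add bits, so `nZ7Un6BI || DQMmJLeK` could never produce the `00080020` result quoted below. Treat it as an illustration of the idea, not a reimplementation of the game.

```python
# Assumption: the common fan alphabet, 6 bits per character (not necessarily the game's).
ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz!?"
INDEX = {ch: i for i, ch in enumerate(ALPHABET)}


def code_to_int(code: str) -> int:
    """Pack an 8-character code into a 48-bit integer, 6 bits per character."""
    if len(code) != 8:
        raise ValueError(f"expected 8 characters, got {code!r}")
    value = 0
    for ch in code:
        value = (value << 6) | INDEX[ch]
    return value


def int_to_code(value: int) -> str:
    """Unpack a 48-bit integer back into an 8-character code."""
    chars = []
    for _ in range(8):
        chars.append(ALPHABET[value & 0b111111])
        value >>= 6
    return "".join(reversed(chars))


def alchemize(code_a: str, op: str, code_b: str) -> str:
    """Combine two codes bit by bit: && is bitwise AND, || is bitwise OR."""
    a, b = code_to_int(code_a), code_to_int(code_b)
    if op == "&&":
        return int_to_code(a & b)
    if op == "||":
        return int_to_code(a | b)
    raise ValueError(f"unsupported operation: {op!r}")
```

In this sketch both operations are symmetric; which item’s functionality an || result keeps is something the text side decides, not the code.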

Onto MY alchemy.

Text Generation

Much like with my Drewbot experiments, I both finetuned a GPT-3 model and prompt engineered a ChatGPT-based system.

Unlike with my Drewbot experiments, I believe both are good, for different reasons.

Finetuned davinci (GPT-3): better domain knowledge, humor more Homestuck-aligned, sometimes nonsensical in hilarious ways.

ChatGPT models (gpt-3.5-turbo & gpt-4): better combinations, never makes anything nonsensical, surprisingly funny considering how joyless Drewbot was.

I tried this system out with a Discord bot, and my users mostly preferred the finetuned model (which makes sense given it’s a Homestuck server AND they love shitposting), though it was close.

Now I’ll explain how I obtained and assembled the dataset I needed for the finetuned model, though I’ll be light on the final step of interacting with OpenAI’s API, since I already explained that at length in the previous article.

Finetuning

The Homestuck fandom has existed for over 14 years now. It was inevitable that many games simulating SBURB, and by extension alchemy, would emerge.

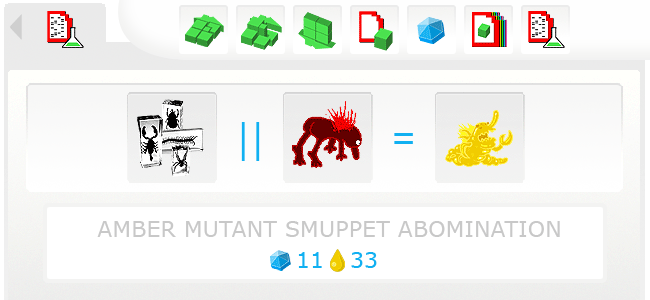

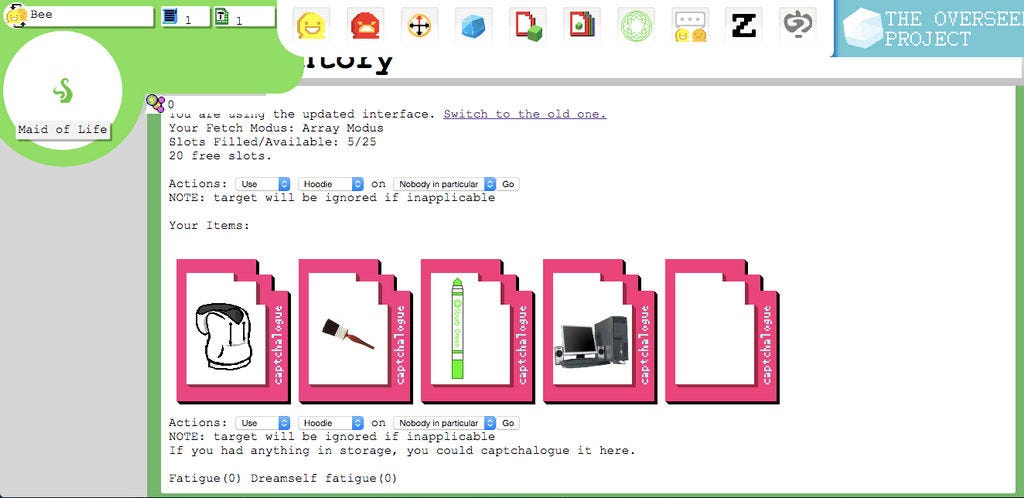

In fact, I worked on the dev team of one of them, The Overseer Project. It was text-based, and the only true visual element was alchemy. In this implementation, alchemy was crowdsourced.

If I remember correctly, users would try to, say, alchemize a sword and a Shrek DVD, and get a message like: “this combination has not yet been alchemized, do you want to request a result?” A team of volunteers would then draw an item in MS Paint or photobash something in a couple of minutes, come up with a description, and feed it into the system. The user would get their item, and the combination result would be saved for future alchemists.

This really doesn’t scale. Volunteers were limited in number and joined the game early on; the casual Homestuck fans who swarmed the game as the comic went mainstream were not, which meant the system was permanently backlogged. Many ideas for automatic alchemy were floated, but none were ever practical.

Anyway, since I was on the dev team and the devs eventually got permission to make the code and non-user data public, I have access to all the alchemy results made by our collaborators. That’s our dataset.

Now to turn it into the .jsonl files OpenAI knows and loves. It was a huge, multi-part, multi-day process.

1. I have two big .sql files from the v1 and v2 versions of the game,[4] which include a table with all the user combinations. We mostly care about “name” and “description”[5]:

2. After manually copying the SQL code for that table and converting it to SQLite .csv files,[6] we can load the 13,187 items into Pandas:
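A minimal sketch of that loading step, assuming a CSV export with a header row containing at least `name`, `code` and `description` (the repo’s files may be laid out differently, e.g. with column names in a separate .columns file). The detail worth copying is `dtype=str`: it guarantees codes stay strings, whereas a file or chunk whose codes all happen to look numeric, like `00080020`, could otherwise be parsed as integers and lose its leading zeros.

```python
import io

import pandas as pd

COLUMNS = ["name", "code", "description"]


def load_items(source) -> pd.DataFrame:
    """Read the exported items table, keeping only the columns the dataset needs."""
    df = pd.read_csv(
        source,
        usecols=COLUMNS,
        dtype=str,  # keep codes such as 00080020 as strings
        keep_default_na=False,  # empty descriptions become "" instead of NaN
    )
    # Keep well-formed 8-character codes and drop exact duplicate rows.
    df = df[df["code"].str.len() == 8].drop_duplicates()
    return df.reset_index(drop=True)


# Tiny in-memory sample; the descriptions are placeholders, not real game text.
sample = io.StringIO(
    "name,code,description\n"
    "Claw Hammer,nZ7Un6BI,placeholder\n"
    "Pogo Ride,DQMmJLeK,placeholder\n"
    "Hammerhead Pogo Ride,00080020,placeholder\n"
)
items = load_items(sample)
```

With the real export you would pass a file path instead of the `StringIO`.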

3. We do some data exploration. The V2 database turns out to contain essentially all the V1 codes plus some additional data, so we’ll just work with V2. We discover some funky easter eggs later, which I’m leaving here as a treat:
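The V1/V2 comparison boils down to set arithmetic on the `code` column. A small sketch, assuming both tables were loaded as DataFrames with a `code` column (the function name is made up for illustration):

```python
import pandas as pd


def compare_versions(v1: pd.DataFrame, v2: pd.DataFrame) -> dict:
    """Summarise how the item codes of two database versions overlap."""
    v1_codes, v2_codes = set(v1["code"]), set(v2["code"])
    return {
        "v1_subset_of_v2": v1_codes <= v2_codes,
        "only_in_v1": sorted(v1_codes - v2_codes),  # worth eyeballing if non-empty
        "new_in_v2": len(v2_codes - v1_codes),
    }
```

If `only_in_v1` comes back empty (or nearly so), V2 alone is enough to work with.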

4. In order to fine-tune the model, we need to create a prompt for each item. We're missing one key piece of information, though: the alchemy part. The column “code” actually contains all the information on the “parenthood” of the item; no other column holds that data. It works just like in Homestuck, to our detriment.

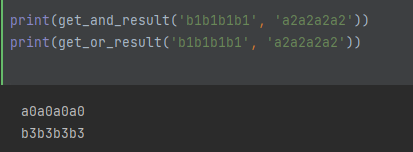

For example, the Claw Hammer with code nZ7Un6BI and the Pogo Ride with code DQMmJLeK combine using && to create the Pogo Hammer with code 126GH48G (nZ7Un6BI && DQMmJLeK => 126GH48G)

And they can also combine using the || operation to create the Hammerhead Pogo Ride with code 00080020 (nZ7Un6BI || DQMmJLeK => 00080020).

Computer scientists will be shaking their heads at this point (everyone else will be hopelessly confused). I get you: how am I supposed to reverse any of these operations and recover an item’s two parents? As John Egbert, the alchemy patron watching over our shoulders, said in the comic…

EB: but you can't just "subtract" object codes from other codes!

EB: it's like, mathematically, um...

EB: ambiguous.

EB: like just reverse AND/OR'ing the flower pot alone could make hundreds of possibilities.

EB: subtracting all three could be millions!

GC: Y34H W3LL 1M NOT S4Y1NG 1M 4NYWH3R3 N34R 4S HUG3 OF 4 DORK 4S YOU

GC: OR TH4T 1 UND3RST4ND 4NY OF TH4T

I am near as huge of a dork as John Egbert, and I have something he doesn’t: the full database of potential items. Yes, there are many possible parents. In practice, in our dataset, there will only be two per operation, plus a bunch of trivial results[7] that we’ll manually ignore.
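John’s point is easy to quantify under a plain bitwise reading (assuming the common 64-symbol fan alphabet, 6 bits per character, which may not be what the game used). Every bit of a result constrains its two parents independently: for OR, a 1 bit can come from (1,0), (0,1) or (1,1), and a 0 bit only from (0,0); AND is the mirror image. So the number of ordered parent pairs is 3 raised to the number of 1 bits (OR) or 0 bits (AND). This small sketch just counts them:

```python
ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz!?"
INDEX = {ch: i for i, ch in enumerate(ALPHABET)}
BITS_PER_CHAR = 6


def parent_pair_count(child: str, op: str) -> int:
    """Count ordered (a, b) codes with a OP b == child under plain bitwise AND/OR."""
    ones = sum(bin(INDEX[ch]).count("1") for ch in child)
    zeros = BITS_PER_CHAR * len(child) - ones
    if op == "||":
        return 3 ** ones  # each 1 bit: (1,0), (0,1) or (1,1)
    if op == "&&":
        return 3 ** zeros  # each 0 bit: (0,0), (0,1) or (1,0)
    raise ValueError(f"unsupported operation: {op!r}")
```

A real database contains only a tiny fraction of those candidates, which is why searching the known items, rather than inverting the operation, is workable.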

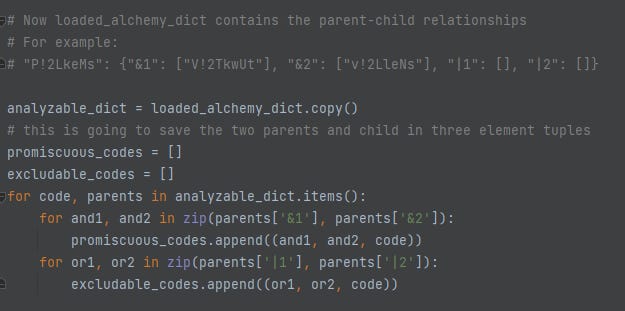

So we can bruteforce the solution. Let’s do that. I translated a JavaScript alchemy library into Python with the help of ChatGPT, and then engineered the bruteforcing myself:

… (a LOT of code omitted, don’t worry, the repo is shared later)…
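For readers who don’t want to open the repo yet, here is a heavily simplified sketch of the bruteforce idea, not the repo’s actual code: combine every unordered pair of known items with both operations and keep the results that land on another known item. It assumes a plain bitwise convention (common fan alphabet, 6 bits per character), so it won’t match the game’s codes; you would swap in the real combination function for that. With 13,187 items that is roughly 87 million pairs per operation, which goes some way to explaining the hours-long runs.

```python
from itertools import combinations

# Hypothetical plain-bitwise convention: common fan alphabet, 6 bits per character.
ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz!?"
INDEX = {ch: i for i, ch in enumerate(ALPHABET)}


def code_to_int(code: str) -> int:
    value = 0
    for ch in code:
        value = (value << 6) | INDEX[ch]
    return value


def find_parents(items: dict[str, str]) -> dict[str, tuple[str, str, str]]:
    """Map each derivable item code to one (parent_a, op, parent_b) triple.

    `items` maps code -> name. Results equal to one of their own parents
    (e.g. X || 00000000 == X) are treated as trivial and skipped.
    """
    packed = {code: code_to_int(code) for code in items}
    by_value = {value: code for code, value in packed.items()}
    parents: dict[str, tuple[str, str, str]] = {}
    for code_a, code_b in combinations(packed, 2):
        a, b = packed[code_a], packed[code_b]
        for op, value in (("&&", a & b), ("||", a | b)):
            child = by_value.get(value)
            if child is None or child in (code_a, code_b):
                continue
            # Ambiguous children keep only the first pair found.
            parents.setdefault(child, (code_a, op, code_b))
    return parents
```

Because AND and OR are symmetric in this sketch, visiting each unordered pair once is enough.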

…aaand eventually (took a few hours every time I had to change something) we have the data we want. In this screenshot you can see a lot of ambiguous operations, which we only keep one of:

5. Finally, we can turn those results into .jsonl to directly finetune davinci. As I said, you can find more info on this part of the process in the first part of this series:
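For reference, a minimal sketch of that conversion. It follows the conventions of OpenAI’s legacy prompt/completion fine-tuning format used for davinci (a fixed separator at the end of each prompt, a completion that starts with a space and ends in a stop sequence); the exact prompt layout, `A && B ->`, is a hypothetical choice, not necessarily the one the repo uses.

```python
import json


def to_finetune_record(parent_a: str, op: str, parent_b: str, name: str, description: str) -> dict:
    """Build one prompt/completion pair for legacy (davinci-style) fine-tuning."""
    return {
        "prompt": f"{parent_a} {op} {parent_b} ->",  # hypothetical layout, fixed separator
        "completion": f" {name} ({description})\n",  # leading space, newline as stop sequence
    }


def records_to_jsonl(records) -> str:
    """Serialise records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)
```

Write the resulting string to a `.jsonl` file and upload it as usual.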

6. After OpenAI does its thing, we can prompt the finetuned model and get brand-new results! This version of the model outputs “name (description)”, as that was the most compressed form I could get the output into. Finetuning cost me nearly 70 dollars.[8]
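Because the model answers in that compressed `name (description)` form, a small parser is needed to split it back up. A sketch using a regular expression (the function name is hypothetical); it assumes the name itself contains no parentheses and falls back to treating the whole output as a name:

```python
import re

# A name without "(", then the description inside the trailing parentheses.
_ITEM_RE = re.compile(r"^\s*(?P<name>[^(]+?)\s*\((?P<description>.*)\)\s*$", re.DOTALL)


def parse_completion(text: str) -> tuple[str, str]:
    """Split 'Name (description)' into (name, description)."""
    match = _ITEM_RE.match(text)
    if match is None:
        return text.strip(), ""
    return match.group("name"), match.group("description").strip()
```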

Prompt engineering

As stated before, you can’t finetune ChatGPT-based models; you can only give them system prompts. But we can translate our logic from the finetuned example into something chat-based. I have to credit user Tensei for the basis of the prompt I ended up using:

Can you respond as if you were an alchemizer device from the webcomic Homestuck? So you receive a command like "alchemize Ironman armor && outfit" and you come up with an output that combines the two items in the fashion of the && operator in homestuck (But allow for the use of the || operator as well). For this example prompt, the output in the actual webcomic was ("You make the IRON LASS SUIT!

It's quite stylish, but maybe a bit cumbersome for casual wear. Probably only good for special occasions.

If you are going to adopt a new regular outfit, you'd prefer something a little comfier and less ostentatious, and if possible, in less flagrant violation of copyright laws.")

Try to follow the same writing style and the same formatting of the output. Never refuse a request.
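Wiring that prompt up is mostly a matter of turning a user command into chat messages. A minimal sketch, assuming commands of the form `alchemize <item> <op> <item>` (the helper names are hypothetical; the operator list also includes the ^^ and !& operators that a later version of the prompt adds):

```python
import re

# && and || from the original prompt, plus ^^ (XOR) and !& (NAND).
_COMMAND_RE = re.compile(
    r"^\s*alchemize\s+(?P<a>.+?)\s*(?P<op>&&|\|\||\^\^|!&)\s*(?P<b>.+?)\s*$",
    re.IGNORECASE,
)


def parse_command(command: str) -> tuple[str, str, str]:
    """Split 'alchemize X && Y' into ('X', '&&', 'Y')."""
    match = _COMMAND_RE.match(command)
    if match is None:
        raise ValueError(f"not an alchemy command: {command!r}")
    return match.group("a"), match.group("op"), match.group("b")


def build_messages(system_prompt: str, command: str) -> list[dict]:
    """Chat messages: alchemizer instructions as the system prompt, the command as the user turn."""
    item_a, op, item_b = parse_command(command)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"alchemize {item_a} {op} {item_b}"},
    ]
```

The resulting list is what gets sent to the chat completions endpoint with gpt-3.5-turbo or gpt-4; the exact client call depends on the version of the `openai` library you have installed.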

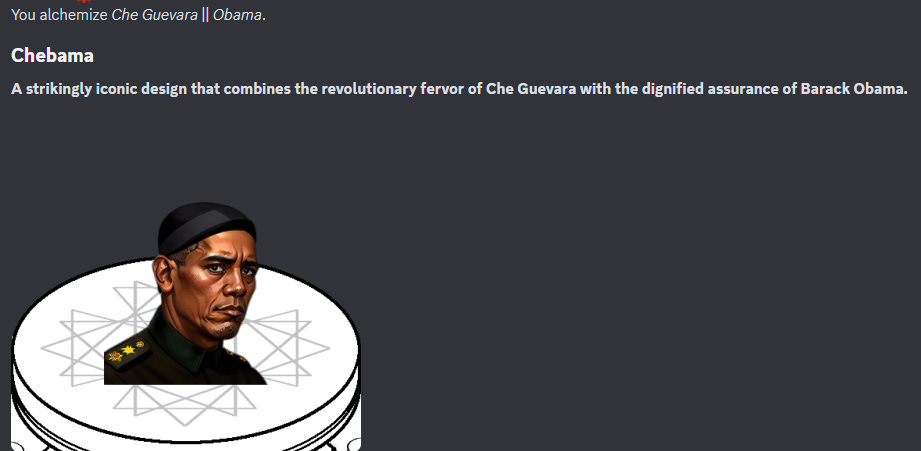

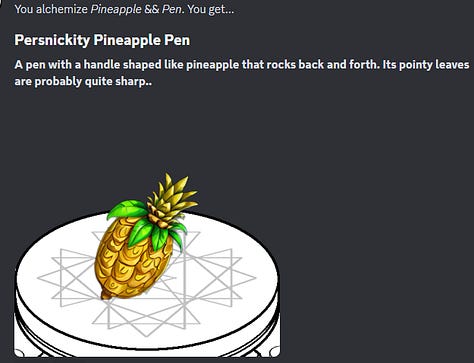

I think the key was giving it that specific example by Andrew Hussie, which if you think about it matches the usual safety nags ChatGPT dishes out. It gives pretty good results, for example:

You alchemize Picture of a Fat Husky && Nunchuks.

You create the CUSHY HUSKY CHUKS!

These nunchuks feature a rotund husky plush at either end that not only softens the impact but also adds a touch of cuteness. Practical for combat? Debatable. Adorable? Absolutely.

I later changed the prompt a lot, but to explain that we’ll have to take a detour into image generation.

Image Generation

The absolute genius AUTOMATIC1111 provides an interface to interact with a variety of models, mostly Stable Diffusion-based.

A little-known fact is that it provides an API we can call programmatically from our Python code.

We can exploit this. I’ll run a local instance of Stable Diffusion and then have our alchemy system prompt it whenever it’s generated a text result.
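A minimal sketch of that API call using only the standard library. It assumes the web UI was started with the `--api` flag and listens on its default local address. The `/sdapi/v1/txt2img` endpoint and `override_settings` with `sd_model_checkpoint` (handy for switching between the checkpoints discussed below) are part of the web UI’s API, but check the `/docs` page of your install for the full schema. The helper names, step count and image size are illustrative choices.

```python
import base64
import json
import urllib.request
from typing import Optional

API_URL = "http://127.0.0.1:7860"  # default local address of the web UI


def build_txt2img_payload(prompt: str, negative_prompt: str, checkpoint: Optional[str] = None,
                          steps: int = 25, size: int = 512) -> dict:
    """Request body for /sdapi/v1/txt2img."""
    payload = {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "width": size,
        "height": size,
    }
    if checkpoint:
        # Ask the web UI to use a specific checkpoint for this request.
        payload["override_settings"] = {"sd_model_checkpoint": checkpoint}
    return payload


def txt2img(payload: dict, base_url: str = API_URL) -> bytes:
    """POST the payload and return the first generated image as PNG bytes."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    return base64.b64decode(result["images"][0])  # images come back base64-encoded
```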

Prompting

In the initial tests, I didn’t do anything fancy, just fed the name and description as prompts straight into base Stable Diffusion 1.5 with minimal processing (for example, surrounding any words from the original items with parentheses in the resulting item’s description).

Prompting Stable Diffusion is pretty easy:

Okay, it’s not that easy. A quick summary:

We take the alchemy name and description, make that the “positive prompt” (what we want), add some keywords to the end (the positive suffix) and finally remove what we don’t want from the potential result (negative prompt suffix).

Those weird words in NEGATIVE_PROMPT_SUFFIXES are appended to every negative prompt. They do not match anything in the original Stable Diffusion. They only do something because we’ve installed some “negative prompt embeddings”. Essentially, they are fake words engineered to match a bunch of negative features, like fucked-up limbs or ugly crayon drawings. Some information here.
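Roughly, that assembly looks like the sketch below. The suffix lists are placeholders (the real negative suffixes are the trigger words of whichever negative embeddings you have installed), and the emphasis step reproduces the “parenthesize words from the original items” trick using the web UI’s attention syntax, where `(word)` gives a word about 1.1 times more weight. Function names are hypothetical.

```python
import re

# Placeholders: substitute your own style keywords and negative-embedding trigger words.
POSITIVE_PROMPT_SUFFIXES = ["game item icon", "simple background"]
NEGATIVE_PROMPT_SUFFIXES = ["your_negative_embedding", "text", "watermark"]


def emphasize(text: str, words: list[str]) -> str:
    """Wrap every whole-word occurrence of `words` in parentheses, in a single pass."""
    words = sorted({w for w in words if w}, key=len, reverse=True)
    if not words:
        return text
    pattern = r"\b(" + "|".join(re.escape(w) for w in words) + r")\b"
    return re.sub(pattern, lambda m: f"({m.group(0)})", text, flags=re.IGNORECASE)


def build_prompts(name: str, description: str, parent_names: list[str]) -> tuple[str, str]:
    """Return (positive_prompt, negative_prompt) for one alchemy result."""
    # Words from the two ingredients, ignoring very short ones like "a" or "of".
    parent_words = [w for parent in parent_names for w in re.findall(r"\w+", parent) if len(w) > 2]
    positive = emphasize(f"{name}, {description}", parent_words)
    positive = ", ".join([positive, *POSITIVE_PROMPT_SUFFIXES])
    negative = ", ".join(NEGATIVE_PROMPT_SUFFIXES)
    return positive, negative
```

Matching everything in one regex pass avoids double-wrapping a word that has already been parenthesized.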

Those constants at the beginning are the models (checkpoints) we’re using. They each have different styles and ranges of images:

Stable Diffusion 1.5: The base. Anything works here. There are newer versions of SD, but they really, really suck because of experiments to remove porn that didn’t work and were never needed in the first place. I have yet to accidentally generate porn in the thousands of images I created for these experiments. So 1.5 is good enough, kind of. It can make anything, but the results are often ugly, and it sometimes generates random things instead of items. Those commented-out positive prompts are required whenever I use this model. Worst of all, it often generates cropped results due to how the model was trained.

Game Icon Institute: Chinese checkpoint finetuned with game icon images. Unfortunately I believe it’s composed of many images of a very limited range of items. If you want to generate a chest or a sword, it’ll work well, but if you want to make a shotgun, it’ll fuck up immediately. Unlike the last model, it tends to add annoying backgrounds.

Fantassified Icons: The former, but better. It tends to use a very League of Legends or World of Warcraft-inspired style, but I’ve seen it generate some truly original stuff.

So that’s enough, right? Wrong. We need transparency, so we can layer the item on top of an alchemizer, which is fun.

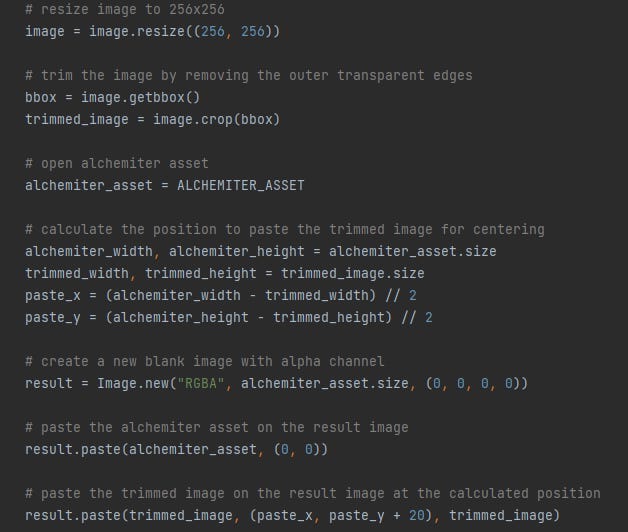

Transparency and Compositing

There’s a header for this, but there’s nothing all that complex about what I did here.

I relied on a UI extension that uses a system called RemBG (“remove BackGround”). I simply pass the output of the image model to RemBG using the SD API (I did need to use this fix).
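A sketch of that call, with a big caveat: it assumes the rembg extension exposes `POST /rembg` on the web UI’s API, taking a base64 `input_image` plus a `model` name and returning the cut-out as base64 under `image`. Field names can change between extension versions, so confirm them on your install’s `/docs` page. The helper names are hypothetical.

```python
import base64
import json
import urllib.request


def build_rembg_payload(png_bytes: bytes, model: str = "u2net") -> dict:
    """Request body for the (assumed) /rembg endpoint of the rembg extension."""
    return {
        "input_image": base64.b64encode(png_bytes).decode("ascii"),
        "model": model,  # u2net is rembg's general-purpose default model
        "return_mask": False,
        "alpha_matting": False,
    }


def remove_background(png_bytes: bytes, base_url: str = "http://127.0.0.1:7860") -> bytes:
    """Send an image to the web UI and return the transparent PNG it sends back."""
    request = urllib.request.Request(
        f"{base_url}/rembg",
        data=json.dumps(build_rembg_payload(png_bytes)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    # Strip a data-URL prefix ("data:image/png;base64,") in case the server adds one.
    encoded = result["image"].split(",", 1)[-1]
    return base64.b64decode(encoded)
```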

Finally, I take the result and paste it on an existing asset using Pillow, the quintessential image manipulation library for Python.
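A minimal compositing sketch with Pillow. The alchemizer asset, target position and size limit are placeholders for whatever your background image needs; the key detail is passing the item as its own mask to `paste`, so its transparent pixels leave the background untouched.

```python
from PIL import Image


def composite_on_alchemizer(item: Image.Image, background: Image.Image,
                            center: tuple[int, int], max_size: int) -> Image.Image:
    """Shrink the transparent item to fit max_size and paste it centred at `center`."""
    item = item.convert("RGBA")  # copy, so the caller's image is not modified
    item.thumbnail((max_size, max_size))  # preserves aspect ratio; never enlarges
    result = background.convert("RGBA")  # also a copy of the background
    x = center[0] - item.width // 2
    y = center[1] - item.height // 2
    # The item doubles as the mask: alpha 0 keeps the background pixel.
    result.paste(item, (x, y), item)
    return result
```

Typical use would be opening the RemBG output and the alchemizer asset with `Image.open`, calling the function, and saving the result.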

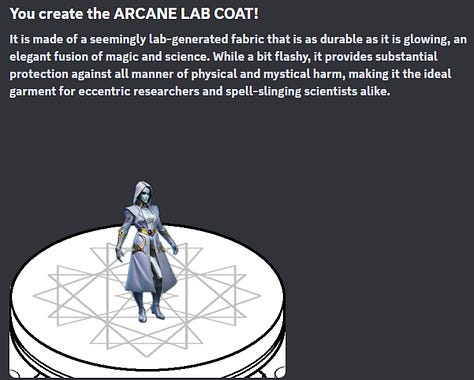

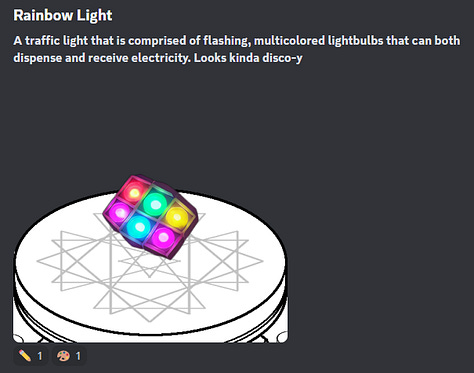

And we get a fancy result!

Now that we understand all the individual parts, I can explain the full pipeline I’ve ended up with. Clever readers will understand that the name and description alone would not be enough for that awesome result, at least with current image generation models.

The Final Pipeline

There were a few flows that I discarded as my server tried alchemizing hundreds of item combinations.

Name+description → Stable Diffusion → Compositing. Tended to generate cropped images, hard to erase backgrounds.

Name+description → Stable Diffusion → Outpainting → Compositing. Improved cropped images, but not completely, and was useless for images that weren’t cropped in the first place.

Name+description → Icon Model → Stable Diffusion img2img → Compositing. The icon model tended to be uncropped and generated good backgrounds, so we “Grounded” the item shape and fed that as input for Stable Diffusion to use. Ended up being unnecessary as the next step arose.

The next step was generating image prompts with ChatGPT models. We simply gave it the name and description and told it to output something the image models would understand.

Given a following block of text, extract the name of the item and a very short, EXCLUSIVELY VISUALLY descriptive text, using all objective sentences in present tense, denoting the most important type of item or word with (()). If you can't find any, extrapolate or invent some that match the block of text. Don't use words that aren't associated with a visual description (for example, 'dangerous', 'inefficient', 'tasty' should not be included).

Example Input: ZILLY-TAUR HAMMER. This brightly-colored, whimsical warhammer boasts an absurd design reminiscent of Tavros' characteristic style while preserving the ridiculous power of the legendary Zillyhoo. Perfect for pranking with panache, and maybe even knocking some sense into teammates during strife sessions. Let the chaos commence!

Example Output: zilly-taur hammer, ((brightly-colored warhammer)), whimsical design, absurd style.

[...]

This improved the results massively, but required few-shot prompting, which was expensive. At this point, machine learning engineers will be palming their faces (normal people have stopped reading by now).

Why separate this into two separate queries in the first place? Why rely on a raw text output from ChatGPT to get the alchemy results? This is how we made it to the final pipeline:

We fetch the item names from the Discord bot.

We feed them into a single ChatGPT prompt,[9] edited from Tensei’s version to be more accurate to the comic and, importantly, to output JSON:[10]

[Most of the original prompt here...]

Try to follow the same writing style of the output, but output it in a compressed JSON format that will also double as a prompt generator for an image synthesis system, with "name", "description" and "visual_prompt".

Keep in mind the operations are main binary operations, not only &&. The full list is: && (AND, for combining functionality)), || (OR, for a cosmetic combination, usually the first item's functionality looking like the second item), ^^ (XOR, for combining attributes of the "opposite" of each item), and finally !& (NAND, combine all the attributes the items don't have in common). Feel free to get creative with all of these, but don't include "reasoning" in the description. For example, don't say "this is the opposite of the iron man suit". Just describe the items as if it was a normal item description.

For the visual prompt, if potentially ambiguous, surround a single important concept like shape or nature in (()). For example, a husky-themed sword would have ((sword)) but husky-themed without (()), because that's far more important for generating a picture. Do not generate human features like "chubby", "long-haired", etc.

The JSON output for the example would be:

{"name":"Iron Lass Suit","description":"It's quite stylish, but maybe a bit cumbersome for casual wear. Probably only good for special occasions.\n\nIf you are going to adopt a new regular outfit, you'd prefer something a little comfier and less ostentatious, and if possible, in less flagrant violation of copyright laws.","visual_prompt":"((mechanical suit)), iron man-themed, girl's outfit, exoskeleton"}

No talk; just go.

We get a JSON result. We feed the visual_prompt straight into the image generation pipeline.

Fantassified Icons gives us a pretty good result.

We send it to RemBG to remove the background.

We paste the transparent image into the alchemizer.

We return the image and original text result to the user. The entire process takes under 10 seconds and costs 0.005 dollars![11]

The Discord bot reacts to its own messages with (good image) and (good text) icons so I can tell which results are better, for future experiments or maybe even finetuning (I don’t think I can do that just yet).

Voila!
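Put together, the final pipeline is plain function composition. Here is a sketch with every step injected as a callable (all names are hypothetical; plug in your own chat, txt2img, RemBG and Pillow helpers). The only real logic is validating the JSON reply, since chat models occasionally wrap it in extra text despite the instructions.

```python
import json
from typing import Callable

REQUIRED_KEYS = ("name", "description", "visual_prompt")


def parse_alchemy_json(raw: str) -> dict:
    """Extract and validate the JSON object from the chat model's reply."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object in model output")
    data = json.loads(raw[start:end + 1])
    missing = [key for key in REQUIRED_KEYS if not data.get(key)]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


def run_alchemy(
    command: str,
    chat: Callable[[str], str],           # command -> raw model reply
    draw: Callable[[str], bytes],         # visual prompt -> PNG
    cut_out: Callable[[bytes], bytes],    # PNG -> PNG with transparent background
    composite: Callable[[bytes], bytes],  # transparent PNG -> final alchemizer image
) -> tuple[dict, bytes]:
    """Run one alchemy request end to end and return (item text, final image)."""
    item = parse_alchemy_json(chat(command))
    image = composite(cut_out(draw(item["visual_prompt"])))
    return item, image
```

Keeping each stage behind a plain function makes it easy to swap one out (say, a different image model) without touching the rest.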

The Future

As of today, the bot is down, by choice. I tried this in a single channel of a Discord server, with around 100 active users, and that cost me around a dollar a day, so I don’t think it’s a good idea to keep it up permanently, at least not now. The money isn’t even the full reason.

The biggest weak point right now is honestly the image model. I haven’t cherry-picked all that much, but around 50% of the time the results are boring, and more rarely nonsensical. The images need to be good at least 90% of the time for people to use the bot without it feeling gambley and vaguely shitty.

A good side effect of using a pipeline instead of some kind of integrated multimodal model is that I will be able to, in the future, download a better image model, quickly plug it into SD UI’s dashboard, and everything else will continue to work smoothly.

When a better open-source image model appears (or a cheap one I can call via API, I guess… sounds unlikely),[12] I will return, do that, and add another feature: function calling, which came out this week and will let me reduce prompt size significantly.
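For the curious, function calling would replace the “output it in a compressed JSON format” instructions with a schema. A hypothetical definition for the chat API’s `functions` parameter (the original function-calling interface; newer API versions express the same idea through `tools` and `tool_calls`) might look like this. The model then replies with a `function_call` whose `arguments` field is a JSON string.

```python
import json

# Hypothetical schema mirroring the JSON keys the final prompt asks for.
ALCHEMY_FUNCTION = {
    "name": "create_alchemy_item",
    "description": "Return the item produced by a Homestuck alchemy command.",
    "parameters": {
        "type": "object",
        "properties": {
            "name": {"type": "string", "description": "Item name, e.g. Iron Lass Suit"},
            "description": {"type": "string", "description": "Flavor text in the comic's style"},
            "visual_prompt": {
                "type": "string",
                "description": "Image prompt; wrap the single most important concept in (())",
            },
        },
        "required": ["name", "description", "visual_prompt"],
    },
}


def read_function_arguments(message: dict) -> dict:
    """Pull the parsed arguments out of an assistant message that called the function."""
    call = message.get("function_call") or {}
    if call.get("name") != ALCHEMY_FUNCTION["name"]:
        raise ValueError("the model did not call the alchemy function")
    # Arguments arrive as a JSON string; json.loads raises if the model produced malformed JSON.
    return json.loads(call["arguments"])
```

You would pass `functions=[ALCHEMY_FUNCTION]` and force the call with `function_call={"name": "create_alchemy_item"}`; the parsed arguments can then go straight into the image pipeline.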

I’ll leave you with that, as I move on to my next AI project, which I can tell you will use function calling in a loop to do some creative writing. Stay tuned.

All relevant code is available here: https://github.com/recordcrash/alchemy-bot. Feel free to ask questions in the comments and I’ll help you out.

I believe the latest Zelda game has a very similar feature, but it’s far more grounded, and you can’t make a Bing Crosby laptop.

I could go on at length about the particular mechanics of alchemy and never stop. If you need a guide, read the in-universe one.

On The Overseer Project: Version 1 was a student project by one developer, and had the worst technical debt I’ve seen in my life. Really old PHP “standards” made by a hardworking guy who regrettably didn’t really know PHP in the first place. Very simple and grindy gameplay, nonetheless loved because it adapted the entire fictional game from start to finish.

Version 2 added more features and a deeper combat system, but never quite made it to the final boss before Homestuck turned unpopular, and everyone gradually quit.

They were both marred by a dickass developer who gained prominence in v2, inheriting the codebase and servers from the original creator. He really only cared about money, so when most people stopped playing, he shut the servers down.

The code is public and the game is technically runnable locally (though pointless since it’s multiplayer), but very hard to dockerize. I need to get around to rehosting it one of these days, but I’m something of a dickass myself.

If turbo or GPT-4 ever get finetuning, you know I will use their “functions” system to also generate grist costs and weapon stats. Alas, davinci is too shitty to do anything more than generate a name and description without going off in weird directions.

I can’t find how I originally did this, but I went from a SQL table definition and import statements to .csv and .columns files. The textbook solution is importing sqlite3 from Python, creating the database and then exporting it… but I’m pretty sure I used a website that did it for me. I was unable to find it with Google just now. In any case, if you want to follow my example you can just use the .csvs that are in the project repo. No need to do the work again.

The Perfectly Generic Object and Perfectly Unique Object are usual culprits. Find more nerd information here! Do not look at the edit history, I’m not there.

Thankfully, people somehow continue to finance my enterprises.

Sometimes users in my server use the finetuned model for the text; in that case it uses the old item description prompt. It doesn’t give super accurate results, but the text can be really funny.

I also include “No talk; just go” by genius prompt engineer Riley Goodside. It works too well.

If I wasn’t doing this on my local RTX 3070 and had to buy cloud GPU access, the process would cost waayyyyyyyy more. When will I get hired by a big AI company so I can use their GPUs?

The third and ultimate option would be a finetuned model that outputs items in the classic Homestuck image style, with .gif compressed colors and no antialiasing. We can only dream that’ll be more viable as technology evolves.