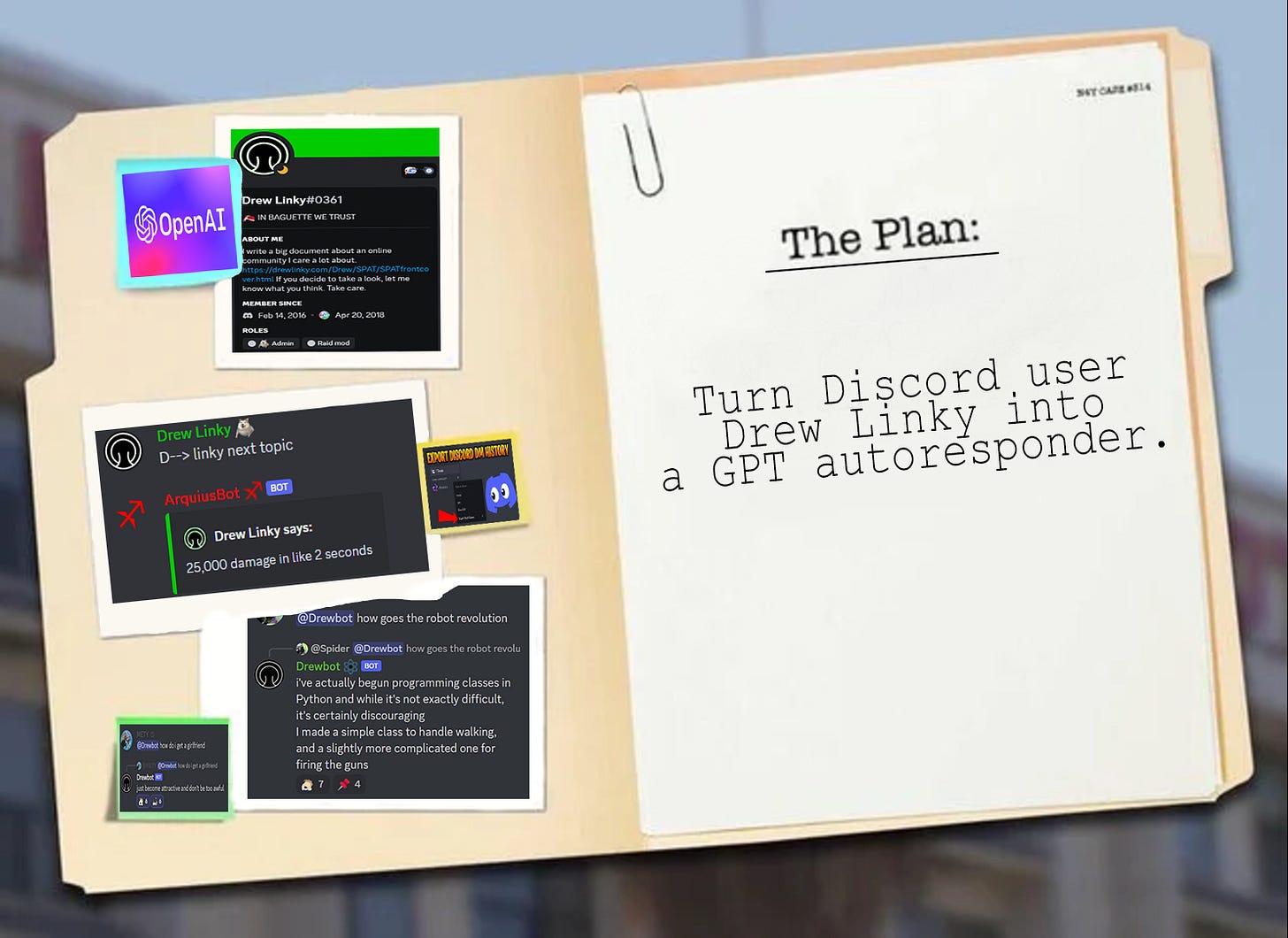

Golemizing the Nachlass: How I Turned My Friend Into A Chatbot

From a ludicrously large corpus of chat messages to an army of GPT-powered Discord bots.

Nachlass ([ˈnaːxlas], older spelling Nachlaß) is a German word, used in academia to describe the collection of manuscripts, notes, correspondence, and so on left behind when a scholar dies.

Introduction

Large Language Models are the future. But there are no related jobs around me, so I’m stuck doing boring old AI-less programming, like a loser.1

As it happens, I moderate a large (~20k daily messages) Homestuck2 Discord. I saw the opportunity to do some cool hobby shit the moment I saw businesses hurriedly and painstakingly assembling custom datasets from low quality sources like Common Crawl.

Well, I have a high quality dataset RIGHT HERE: real people talking to other real people. What could we do with it? If you’ve read the article’s title, you already know.

Disclaimers

I had permission from my test subject. You should probably get permission from yours too.

DiscordChatExporter would have been a way to get all this data while breaking Discord TOS. You’d need an API token and a bunch of other stuff. Definitely don’t do that. I just transcribed 1 million messages by hand in a machine format like God intended.

Nostalgebraist’s Autoresponder is a subconscious inspiration for this. Big fan of the guy and his bot, and really, if you want to be impressed, you’re better off reading his writeups.

The Execution

Finetuning Dataset

In order to turn our base GPT-3 clay into a Drew Linky-brand golem, we’re going to transfer some of Drew’s personality into a base model, GPT Davinci. This is what finetuning will do for us.

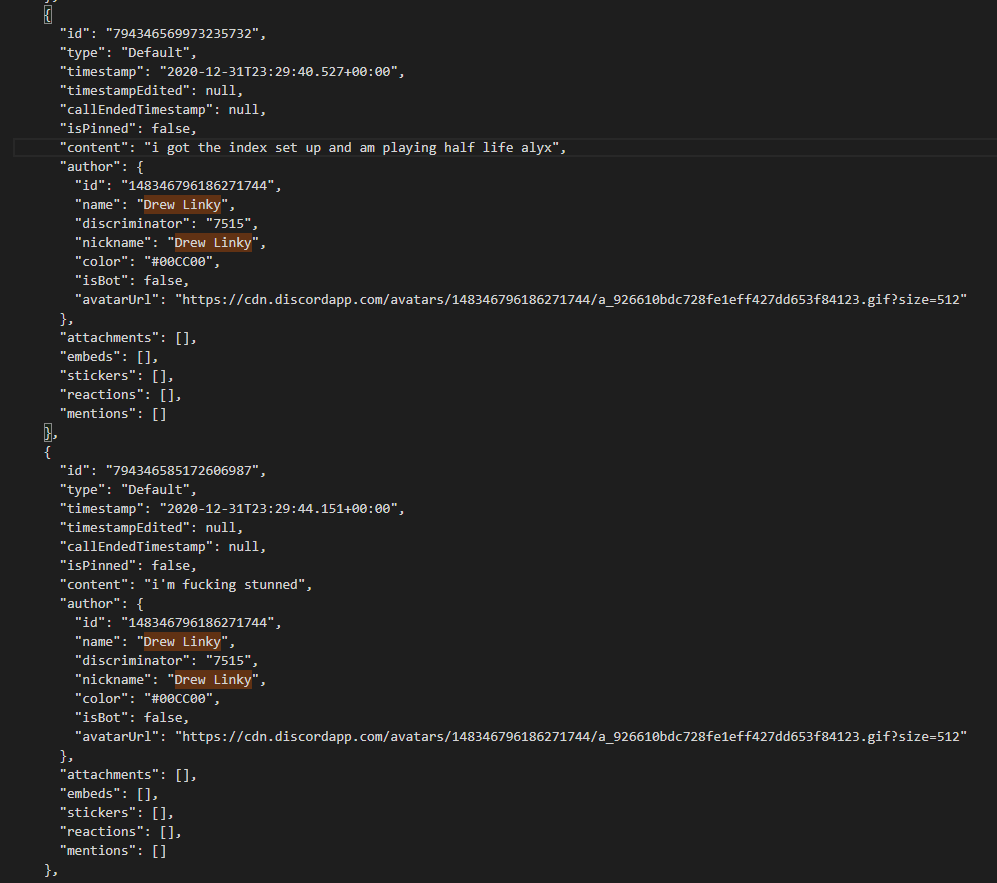

In our case, our datasets will be comprised of existing prompt/response pairs that teach the model how they should respond to brand new prompts. The way I collected the data3 left me with some 2 GB of Discord messages, with some metadata I can make use of. Still, I can't just directly plug everything into OpenAI's service.4

Here’s a sample of the full list, with two messages out of hundreds of thousands:

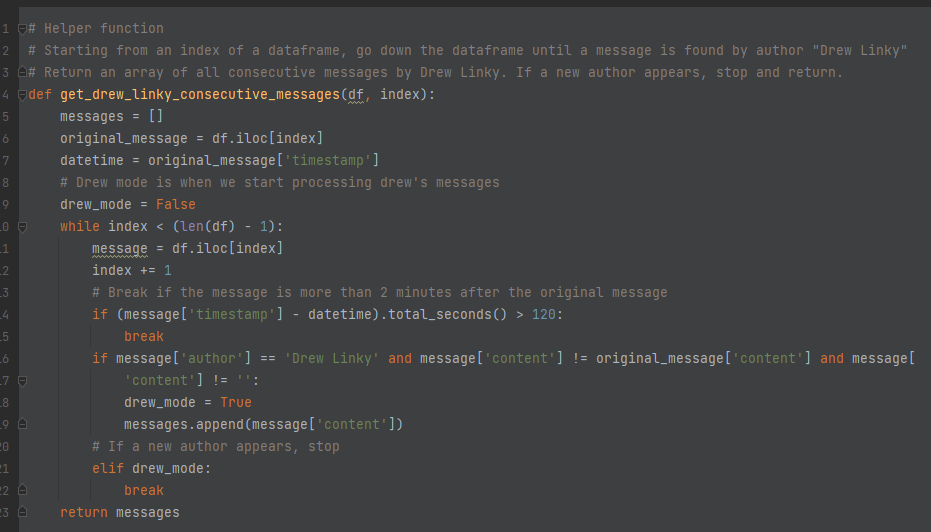

To turn this huge mess into something resembling usability, I used a simple algorithm:

Clean up the message contents so only the usable information remains, like removing “

Pinned message” messages and mentions.Search the list metadata with Pandas, looking for messages with a direct Drew Linky mention or ping. These messages will become the first part of the pair.

Starting from the list of Drew mentions, collect all Drew messages after each prompt in a small time window (2 minutes).

Concatenate all the messages if there’s more than one, separating the messages with

\nlinebreaks, but making them into a single response.Turn the new prompt/response pairs into OpenAI’s expected format.

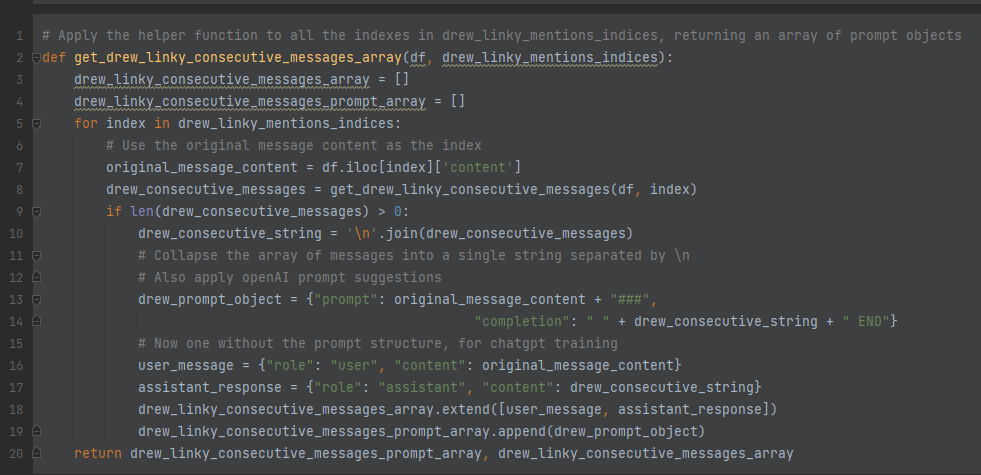

I made a full Jupyter notebook for this, but here are some excerpts of the process if you’re lazy.

I know, I could have generalized a lot of this, right now the entire notebook is built around a specific user. Maybe I’ll do it in the future.

All in all, I ended up with 7285 prompt/response pairs. Here’s a sample of the finished product, a .jsonl5 file:

{"prompt": "cool name change###", "completion": " you're welcome END"}

{"prompt": "didn't someone turn over halo to a team of people that hated it###", "completion": " no you're thinking of homestuck END"}

{"prompt": "I felt that###", "completion": " lmao END"}

{"prompt": "how does it feel###", "completion": " i mean, i kind of saw it coming END"}

{"prompt": "are you Dennis Prager###", "completion": " you wish END"}

The empty spaces, ### and END are all OpenAI recommendations, to make sure the model learns to tell apart prompts from responses in the context of text completions. They switched to a saner format for ChatGPT-related training… but you can’t finetune those models, which is dumb.

I explain a lot of things in the notebook, but for the full context, see what OpenAI has to say about finetuning.

Finetuning Process

This is pretty trivial. Now that we have a finished dataset, it’s time to upload it to OpenAI via the command line.

> env OPENAI_API_KEY="sk-whatever-key" openai api fine_tunes.create -t drew_linky_consecutive_messages.jsonl -m davinci --suffix "drew_linky"

> env OPENAI_API_KEY="sk-whatever-key" openai api fine_tunes.follow -i ft-whatever-model-idThis process took a couple hours,6 but fine tuning was pretty in vogue when I did it in February, so the servers might be less overloaded now. On the other hand, if the finetuning service is sharing machines with ChatGPT, you’re screwed.

When the process ends, you’ll get a human readable name for the model that you’ll be able to call from your account from now on. You’ll also be charged. For 901,256 trained tokens I was charged a bit under 30 dollars.

Simple Prompting

def query_openai_model(prompt: str):

response = openai.Completion.create(

model=MODEL_NAME,

prompt=prompt,

max_tokens=100,

stop=[" END"]

)

text = response.choices[0].text

# remove empty space at the beginning

processed_text = text[1:]

return processed_textPretty simple stuff. You’ll be charged, but it’ll be cheap, under a cent per request.7 Time to insert the Discord bot into the equation.

The Bot Wrapper

The Discord bot code is available here. It handles a bunch of additional things I’m not going to go over in detail, because they’re boring or irrelevant:

Logging messages and estimated cost in dollars.

Commands to turn off the bot, change it to ChatGPT mode, change temperature8, and more.

Jailbreaking wrappers for ChatGPT mode. It’s now against TOS and I don’t want to risk angering the owners of the best text model out there, so they’re disabled by default in the code.

Caching custom server emotes so the bot can use them at will.

Some basic security to prevent people from spamming the model. If you see the word “backoff”, it refers to the fact we also wait for the OpenAI API if it’s not ready or something fails on their end.

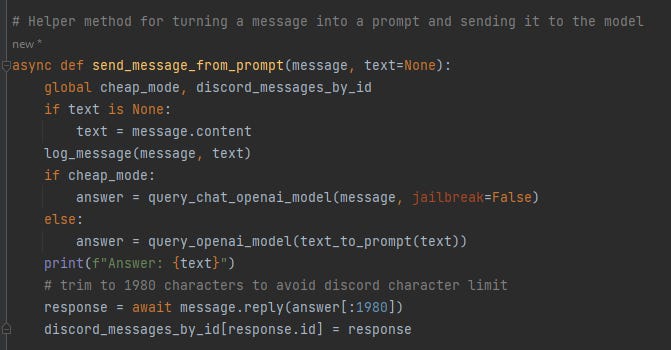

Beyond that, it’s simple. Here’s the brunt of it:

Trivial, right? Besides some accounting for Discord’s uniquenesses, this should be easy to follow:

The bot receives a message directed to it.

It converts the message to a OpenAI-safe prompt.

It contacts the OpenAI API with the finetuned model’s name and the prompt.

After a short time, it receives the response from OpenAI.

The bot converts the response to a Discord message.

The bot responds as Drewbot with the message contents.

And we’re done! Almost!

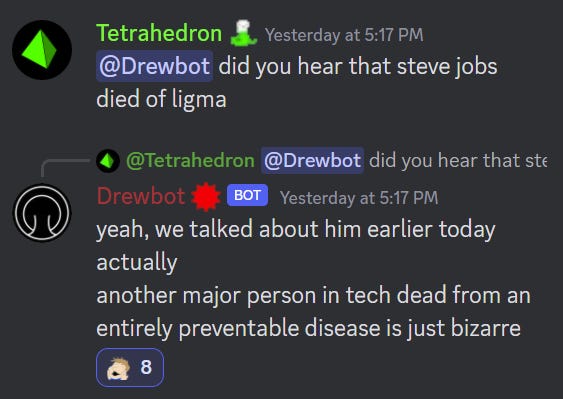

The Inevitable Censorship

As it often happens with open chat platforms, my users tried to get the bot to say funny words nigh instantly.

This version of GPT is actually pretty bad at avoiding slurs by default, and as Drewbot is built there is no realistic way to include “don’t say slurs” in the prompt. I had to simply (and VERY naively9) censor it myself after I got the response from the API.

# censored_words is a user set .env variable

censored_words = os.getenv("CENSORED_WORDS").split(",")

for word in censored_words.copy():

censored_words.append(word + "'s")

censored_words.append(word + "s")

censored_words.append(word.lower())

censored_words.append(word.upper())

censored_words.append(word.capitalize())

# [...]

# [...]

for word in censored_words:

# in my .env file, censor_character is set to the FatHusky emote

replacement_string = censor_character * len(word)

new_text = new_text.replace(word, replacement_string)

return new_text

To OpenAI’s credit, they don’t seem to care that my bot logs include like ten pages of people trying to get it to say the r-word.

Despite all of this, at certain temperatures the bot is sometimes incredibly and gratuitously misogynistic. My users theorize that the non-capitalized text reminds the model from 4chan training data. Or maybe Drew Linky secretly hates women. Your call.

Still, it fulfills its purpose perfectly as a gimmicky Discord bot for entertaining regulars.

NOW we’re done.

Possible improvements

Generalization

I already talked about this, but it’d sure be cool if I generalized my code so people could import their own datasets, select a user, and generate their own finetuned models.

Post a comment if you want this, I guess?

Sentiment Analysis

If you screw up like I did and your bot develops a rare toxic tendency, you could run responses through OpenAI’s classification API. If the response is labeled as negative or toxic, just pick the next potential response,10 or return an error to the user.

My server would kill me if I applied this. But you could do it.

Autoresponding

You could train Davinci on your own messages and make it respond whenever you’re away from your computer.

Unfortunately, Discord wants bot-generated content to come from bot accounts, and will stop you if you use the API to do this kind of thing.

You could ignore the terms of service altogether and manually send responses using the client, or something. I’m not particularly interested in this use case.

Multiple Users

I have one bot representing one user. But nothing says I can’t finetune an entire Discord channel into the model. This would allow me to represent many personalities at once, with the bot changing nicknames and avatars and talking to itself endlessly.

Unfortunately, the limiting factor here is money. It took 30 dollars to handle one user. Unless prices drop massively, it’s a pie in the sky.

Memory

Drewbot cannot remember anything. It will respond to a message, and every reply to that response will be treated like it’s the first interaction the bot has ever seen.

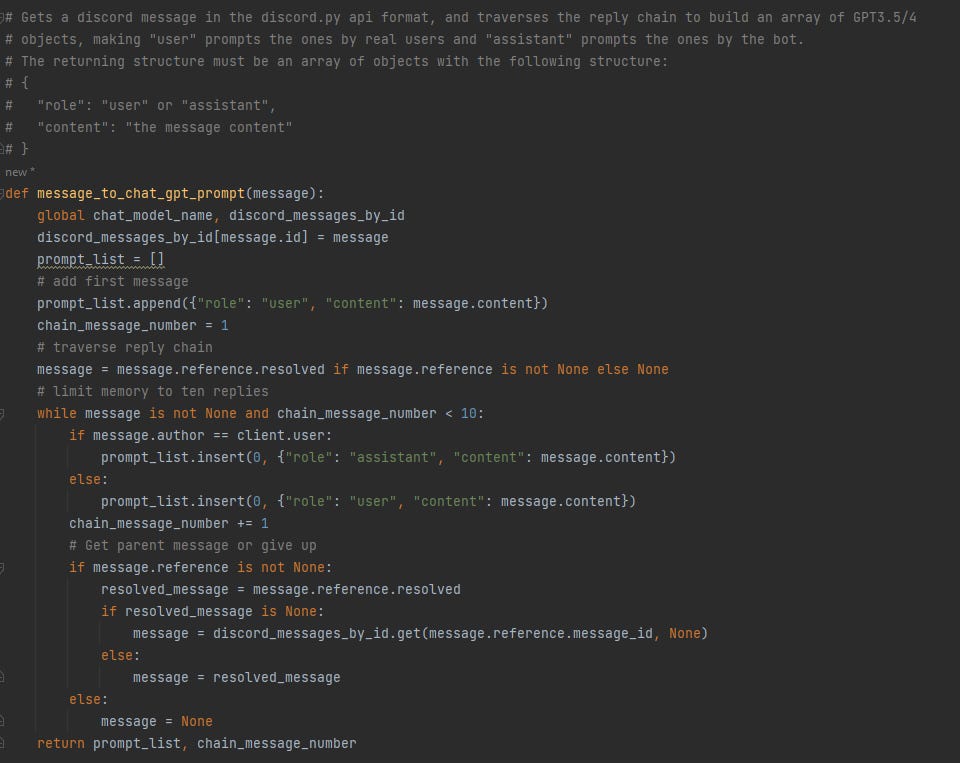

Other models deal with this easily, mostly by doing my job for me. GPT-3.5-turbo (ChatGPT) and later models have a different prompt format that encourages sending previous messages along with the API request.

The following is an entire prompt we’re sending to OpenAI all at once:

{'role': 'user', 'content': "<@872531052420280372> What is the name of Sweet Bro and Hella Jeff's friend?"},

{'role': 'assistant', 'content': 'Sweet Bro and Hella Jeff\'s friend is never explicitly named in the webcomic Homestuck. He is often referred to as "the guy" or simply "the third guy."'},

{'role': 'user', 'content': 'Their token black friend has a name.'},

{'role': 'assistant', 'content': "You may be referring to Tavros Nitram, who is a character in the webcomic Homestuck that is known for being one of the few black characters in the story. However, Tavros is not a friend of Sweet Bro and Hella Jeff, but rather a character in the main Homestuck story. As mentioned earlier, Sweet Bro and Hella Jeff's friend is never given a name in the webcomic."}As you can see, the user replied a second time to the bot with “Their token black friend has a name”, and GPT was able to remember what they were talking about in the first query.11

My method for implementing this (incompatible with the Drewbot in the article, in fact this isn't really a Drewbot at all) is as follows:12

Unfortunately, as you’ve read, base GPT-3 models use a completely different format, and during finetuning I’m only able to send specific pairs of messages and responses. This makes implementing “memory” sound impossible, but there are likely many ways to get around it.

When I fetched the dataset, I could have fetched full conversations between Drew and other people, instead of stopping after the first set of Drew responses.

Then, I would have to assemble those chains in a consistent format.

Finally, from there on I’d have to contact the API with the same format, containing the current reply chain as I see it, including previous prompts.

This would be a huge pain in the ass, though. I had trouble dealing with separators as it is. It would also drive the costs up massively. Prompts would likely balloon to x10 the size at least, and I don’t want to spend a thousand dollars on a hobby.

Conclusion

I hope this is good inspiration to any potential bot developers out there, or people experimenting with LLMs. I find the whole field and the current leaps in AI capabilities fascinating.

I highly recommend you get into this little world while you can. I feel like at best it’s a good career move, at worst it’s just fun.

Incidentally, if you hire remotely worldwide and work for a company doing state-of-the-art stuff, get in touch. As you can see from this article, I’m something of a state-of-the-art myself (we all know lazily contacting OAI’s APIs is at the core of all successful LLM startups in 2023).

If you don’t know the name, it’s a multimedia webcomic that was popular in the early 2010s. You can learn more about Homestuck here.

See second disclaimer above.

We’re probably like a year away from OpenAI being able to dynamically handle this stuff without any manual work. In fact, you could argue all it would take is a Discord ChatGPT plugin to do all this natively.

I had never seen this format in the wild, but it’s a JSON format that forces you to have one entity per line, to streamline parsing.

I wrote it down. 7 minutes for it to even enter the queue, 35 minutes to start training, ~23 minutes per epoch out of 4 epochs.

It gets even ridiculously cheaper with ChatGPT models, but you can’t finetune those.

I quote, “sampling temperature to use, between 0 and 2. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic”. TL;DR: how close to the finetuned answers it should act, the lower the closest.

https://en.wikipedia.org/wiki/Scunthorpe_problem. Look, perfect censorship is out of bounds for this little project.

I’ve failed to mention that OpenAI return a bunch of possible responses to your prompt. It wasn’t particularly relevant to me, because I don’t have a heuristic to choose between them. I simply return the first option.

Of course, it fucks up basic Homestuck knowledge. So much for the singularity!!!11

“Wait, couldn’t you simply keep getting message references, why are you using an in-memory dictionary?” Yeah, that was my original algorithm, and it repeatedly crashed into draconian Discord API limitations. It will refuse to fetch more than two or three levels of replies, and would consistently end the chain at an “unresolved” message reference, that thankfully does include the theoretically resolved message id.

You don't have to take Makin's word for it, Drewbot's resemblance to me is both fascinating and eerie. I've seen examples of AI being used to impersonate people before, but nothing drives it home like having it done to you.

I didn't really know anything about the technical aspects of making this work until reading this article though, it makes me appreciate the effort all the more. Makin likes to tell people to learn to code constantly, and I think he may genuinely perceive it as being easy enough for anyone to pick this stuff up, but it's beyond me. Maybe programmers WILL rule the world eventually.

I honestly think that, if you took a random sampling of posts from me and Drewbot and asked people to tell which is which, they would be stumped. Then again, Drewbot is much funnier than I am, so perhaps it wouldn't be that hard.

There's actually an Imgur link containing Drewbot's "best-of" responses, but I'll refrain from posting it here in case Makin is afraid of all of those spicy responses from before he beat the offensiveness out of it. It's freely accessible on the Discord mentioned in the article though, just ask and we'll point you towards it if you're interested.

He'll make you read his shill list in return, but that's not such a bad trade.